I’ve been involved in a learning experiment these past six weeks. Now that it’s winding down, I thought I’d reflect a bit on some themes that emerged.

For the past 9 months or so, I’ve been taking classes online from Coursera to complete a Cybersecurity specialization taught by the University of Maryland. I’ve learned about security and usability, various flavors of software vulnerability, secure integrated circuit design, digital watermarks, and encryption theory.

In early May I began the final class in the sequence–a capstone project where teams of students attempt to build secure software to match a spec, then try to break one another’s submissions with a combination of pen testing, static code analysis, fuzzers, and theory taught in our other security courses. The project is framed as an international coding/testing competition hosted on builditbreakit.org (hence the “bibifi” in the title of this post), and this May’s running of the contest includes several hundred very sharp participants from around the world.

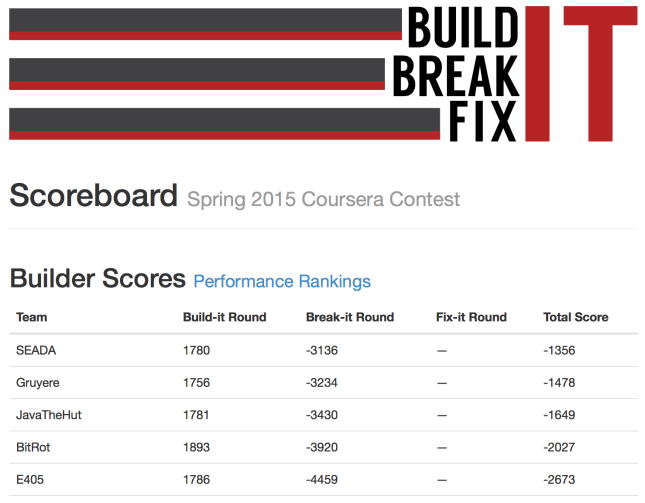

Partial bibifi scoreboard, showing 5 of about 100 teams. I was on team “SEADA”. The net of each team’s buildit score minus the bugs logged against its code in the breakit round shows the current overall standings.

We began by reading a spec for some command-line programs with interesting security features. We were free to implement these programs using whatever programming languages and third-party libraries we liked. All submissions were automatically compiled by the contest infrastructure, and evaluated against an oracle on a reference VM that we downloaded. We had about two weeks to code. After passing a series of acceptance tests, submissions were scored for the presence of some optional features, and for performance (speed of execution and size of data, weighted equally). This gave each team an initial “buildit” score.

Then we were allowed to see one another’s source code, and to submit bugs against other teams. Bugs could be about correctness (team X didn’t implement the spec right), exploitable crashes, integrity violations (where an attacker could modify system state without knowing a password), or confidentiality problems (where an attacker could discover system state without knowing a password). Security-related bugs were worth more points than simple correctness bugs. Bugs were only accepted if an automated system determined that a given team’s software behaved differently than the oracle implementation. Each time a bug we submitted against a team was accepted, points were added to our “breakit” score and subtracted from that team’s “buildit” score.
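The acceptance rule above is a form of differential testing: a bug report only counts if it makes a team’s program disagree with the oracle. Here is a minimal Python sketch of that check. It assumes “behavior” means a program’s stdout plus its exit code; the contest’s actual comparison rules aren’t spelled out here, and the names `Outcome`, `run`, and `diverges` are hypothetical.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Observable behavior we compare (assumption: stdout plus exit code)."""
    stdout: str
    returncode: int


def run(cmd: list[str], timeout: float = 10.0) -> Outcome:
    """Run one command-line invocation and capture its observable behavior."""
    proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout)
    return Outcome(proc.stdout, proc.returncode)


def diverges(oracle_cmd: list[str], team_cmd: list[str]) -> bool:
    """A candidate bug is only worth submitting if the team's behavior differs from the oracle's."""
    return run(oracle_cmd) != run(team_cmd)


if __name__ == "__main__":
    py = sys.executable
    # Hypothetical stand-ins: the "oracle" prints "ok" while the "team" build prints "oops".
    print(diverges([py, "-c", "print('ok')"], [py, "-c", "print('oops')"]))  # True
```

In this sketch, comparing exit codes as well as stdout matters because a crash and a clean rejection can both print nothing to stdout while still behaving differently.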

Finally, we received the bug reports for our software, and had a chance to fix them. Each time we submitted a fix, the system re-evaluated all outstanding bugs; if a single fix eliminated, say, three bugs, those bugs were deemed to be duplicates of one another. Teams that submitted such bugs had their breakit scores adjusted so the awarded points were divided by the number of duplicates, and the fixing team’s buildit score was credited back all but one bug’s worth of points.
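The duplicate adjustment is easier to see with numbers. This is a minimal Python sketch, assuming every bug eliminated by one fix carries the same point value; the real point values aren’t given here, so the 50 points in the example are made up, and `adjust_for_fix` is a hypothetical name. `Fraction` keeps the division by the number of duplicates exact.

```python
from collections import defaultdict
from fractions import Fraction


def adjust_for_fix(reporters: list[str], points_per_bug: Fraction) -> tuple[dict[str, Fraction], Fraction]:
    """Score adjustments when a single fix eliminates len(reporters) accepted bugs.

    `reporters` has one entry per eliminated bug: the team that submitted it
    (a team may appear more than once). Returns the change to each reporting
    team's breakit score and the credit returned to the fixing team's buildit score.
    Assumption: all eliminated bugs were worth the same number of points.
    """
    k = len(reporters)
    if k < 2:
        return {}, Fraction(0)  # no duplicates, so no adjustment
    breakit_delta: dict[str, Fraction] = defaultdict(Fraction)
    for team in reporters:
        # Each bug's award shrinks from points_per_bug to points_per_bug / k.
        breakit_delta[team] -= points_per_bug - points_per_bug / k
    # The fixing team is credited back all but one bug's worth of points.
    buildit_credit = points_per_bug * (k - 1)
    return dict(breakit_delta), buildit_credit


if __name__ == "__main__":
    # Hypothetical: one fix removes three 50-point bugs filed by teams A, B, and A.
    deltas, credit = adjust_for_fix(["A", "B", "A"], Fraction(50))
    print(deltas)  # {'A': Fraction(-200, 3), 'B': Fraction(-100, 3)}
    print(credit)  # 100
```

Under this model, the points removed from breakit scores always add up to exactly the credit returned to the fixing team, so a fix redistributes points rather than creating them.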

I don’t yet know what the final scores will be, because some adjustments are still pending. However, our team was doing well in both the buildit and breakit rounds, and I’m confident that I’ve learned some good lessons already. Here are some points to ponder:

- During the breakit round, it quickly became clear that the gap between good code and bad code was substantial. The entries with staying power (few bugs) tended to be software that was already near the top in the buildit round (good performance, full features). The bug counts for the top teams were maybe 1/10th of the bug counts for the bottom teams, and even that understates the gap: each team could submit at most 10 bugs against any single competitor, which artificially insulated the weak teams.

- I saw little correlation between programming language and success. Entries were written in all sorts of languages: Go, Java, C, F#, C#, Haskell, Erlang, PHP, Perl, OCaml. One of the best entries I saw was written in Python–and so was one of the worst.

- However, I did see a strong correlation between unit tests and success. The best teams had clearly spent time anticipating problems, and proving they handled them. I didn’t find security bugs in any submissions from teams that had meaningful unit tests.

- I also saw a correlation between language mastery and quality. The best entries were written by people who loved their programming language and knew its subtleties. Among top teams, I saw idiomatic Python and Java and Go that would have made van Rossum or Gosling or Pike proud. On the other hand, lesser entries seemed utilitarian or slipshod, not invested in their work. This matches what I’ve observed over decades in the industry: finding the ideal tool might not matter so much, but finding people who are passionate about craftsmanship with tools they’ve mastered matters a lot.

- Not all automation is justified, especially on a very short project like ours. However, test automation rocks. On day two of the breakit phase, one team’s score started climbing; quickly it was 10x, then 50x higher than competitors. How were they finding dozens or even hundreds of bugs per hour? When we completed our team’s test automation tools and turned them on, the answer became obvious: many bugs manifest across scores of teams, so once we could probe all competitors’ code in bulk, our bug counts went through the roof as well. These test automation tools also paid off spectacularly during the fixit phase, because they allowed us to quickly identify which bugs we had eliminated with a single code change. xkcd comics have a lot of truth in them, which is why they’re so funny, but in this case automation paid off…

image credit: xkcd.com

- Repeated cycles of imperfect coding -> testing -> bugfixing produce better software than an exhaustively studied spec plus careful coding. This was obvious from the oracle, which (surprisingly) had many bugs, including obvious stuff like not validating a range of integers correctly. We learned that the staff had written the oracle just before the contest, and although they had lots of time to ponder the spec, they had not had time for a bugfix cycle. Folks, you don’t get quality software on the first try. Evah!

- Programming is a team sport. Some of the “teams” were actually individual students. I don’t know how they fared as a whole, but I know that our team benefited from the complementary strengths of multiple people. I am not the world’s greatest pen tester, and some of the encryption theory we studied made my head hurt, but I know a thing or two about robust software development processes. We helped each other, and did better as a result. See my recent post about the myth of the “rockstar developer”.
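The bulk probing described in the test-automation point can be sketched as a loop over test cases and teams. In this Python sketch, `probe_all` and its `behaves_like_oracle` callback are hypothetical; in practice the callback would run a team’s build on one test case and compare the result with the oracle, which is left abstract here. Sorting the report puts the test cases that break the most teams first, since a single test that trips a common mistake can turn into many bug reports.

```python
from collections.abc import Callable, Iterable


def probe_all(
    test_cases: Iterable[str],
    teams: Iterable[str],
    behaves_like_oracle: Callable[[str, str], bool],
) -> dict[str, list[str]]:
    """Map each test case to the teams whose submissions diverged from the oracle on it."""
    team_list = list(teams)  # materialize so we can iterate once per test case
    failures: dict[str, list[str]] = {}
    for case in test_cases:
        broken = [team for team in team_list if not behaves_like_oracle(case, team)]
        if broken:
            failures[case] = broken
    # Cases that break the most teams come first (sorted() is stable, so ties keep input order).
    return dict(sorted(failures.items(), key=lambda item: len(item[1]), reverse=True))


if __name__ == "__main__":
    # Hypothetical stand-in check: teams "t2" and "t3" mishandle an out-of-range integer.
    def fake_check(case: str, team: str) -> bool:
        return not (case == "out-of-range-int" and team in {"t2", "t3"})

    print(probe_all(["valid-input", "out-of-range-int"], ["t1", "t2", "t3"], fake_check))
    # {'out-of-range-int': ['t2', 't3']}
```

Running the same report against your own build before and after a change is also a quick way to see which outstanding bugs a single fix eliminated.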

How about you? Have you ever had an experience like the contest I describe here? What did you learn from it?
